Human Motion Retargeting to Pepper Humanoid Robot From Uncalibrated Videos Using Human Pose Estimation

Abstract

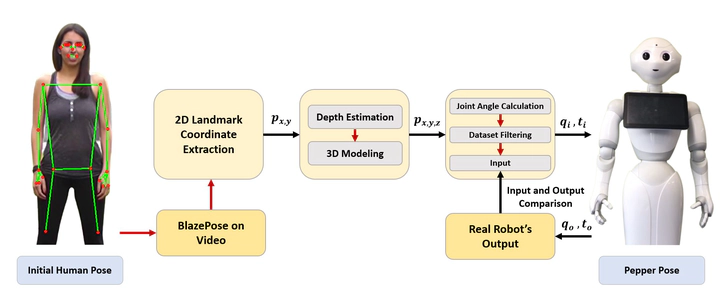

Human motion retargeting to humanoid robots (i.e., transferring motion data to robots so that they imitate humans) is a challenging process with many potential real-world applications. However, current state-of-the-art frameworks have practical limitations, such as requiring camera calibration and expensive motion-capture equipment. We therefore propose a novel framework for motion retargeting based on a single-view camera and human pose estimation. Unlike previous works, our framework is cost-efficient and computationally efficient, and it is applicable to both prerecorded uncalibrated videos and live web-camera streams. The framework is composed of three modules: 1) 2D coordinate extraction using the integrated Google BlazePose, 2) 3D modeling by depth estimation using a geometrical algorithm, and 3) computation of human joint angles and their input to the Pepper robot. Pepper's imitation accuracy is evaluated qualitatively, by direct observation of motion similarity, and quantitatively, by comparing output and input motion data to observe the effect of Pepper's physical limitations. Results suggest that our proposed framework is able to reproduce human-like motion sequences, although with some limitations due to the hardware.
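To make the third module more concrete, the following is a minimal sketch of how a joint angle might be derived from three estimated 3D keypoints and then restricted to a robot's joint range. It is an illustration under stated assumptions, not the implementation used in this work: the function names `joint_angle` and `clamp` are hypothetical, and the limits in `ASSUMED_ELBOW_LIMITS` are placeholder values that should be checked against the robot's documentation.

```python
import math

def joint_angle(a, b, c):
    """Return the interior angle at point b (radians) formed by 3D points a-b-c."""
    u = [a[i] - b[i] for i in range(3)]  # vector from joint to parent keypoint
    v = [c[i] - b[i] for i in range(3)]  # vector from joint to child keypoint
    dot = sum(x * y for x, y in zip(u, v))
    norm_u = math.sqrt(sum(x * x for x in u))
    norm_v = math.sqrt(sum(x * x for x in v))
    if norm_u == 0.0 or norm_v == 0.0:
        raise ValueError("keypoints must not coincide")
    # Guard against rounding errors pushing the cosine outside [-1, 1].
    cos_theta = max(-1.0, min(1.0, dot / (norm_u * norm_v)))
    return math.acos(cos_theta)

def clamp(value, lower, upper):
    """Restrict a commanded angle to the joint's mechanical range."""
    return max(lower, min(upper, value))

# Assumed (illustrative) elbow range in radians; not taken from the paper.
ASSUMED_ELBOW_LIMITS = (0.0087, 1.5620)

# Hypothetical keypoints: shoulder, elbow, wrist of an arm bent at a right angle.
shoulder, elbow, wrist = (0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.0)

interior = joint_angle(shoulder, elbow, wrist)
flexion = math.pi - interior  # 0 for a fully extended arm
command = clamp(flexion, *ASSUMED_ELBOW_LIMITS)
```

Clamping of this kind is one way a robot's physical limitations can make its output motion differ from the input human motion, which is the effect the quantitative evaluation is meant to observe.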